Generative AI for Defensive Cybersecurity Practitioners

Abstract

This paper is an introduction for defensive cybersecurity practitioners and blue teams to Generative Artificial Intelligence (AI). Although the cybersecurity conversation about generative AI tends to focus on abuses, vulnerabilities, and offensive security use cases, the technology is being rapidly incorporated both into the systems blue teams defend, and the tools they use to do so.

First, we introduce cybersecurity practitioners to generative AI terminology and concepts. Second, we show practitioners how to get started with free and minimally paid resources. Finally, we examine use cases for generative AI in defensive cybersecurity, and how to assess which ones need more tuning or adaptation.

Introduction

Artificial Intelligence (AI), or the ability of computer systems or algorithms to imitate intelligent human behavior1, is a broad field with many subfields, one of which is generative AI. Generative AI is a subset of AI that focuses on creating new content. Large Language Models (LLMs) are a type of generative AI trained on large amounts of text data to understand and generate human-like text. The most well-known example of this is ChatGPT, released by OpenAI in November 20222. The release of ChatGPT provided a free, app-based interface to an extremely powerful LLM, sparking the free release of competitors such as Google Bard, Microsoft Copilot (formerly Bing Chat Enterprise), and Anthropic Claude.

ChatGPT and the associated Generative Pre-trained Transformer (GPT) models, GPT3.5 and GPT4, are based on large neural networks, which are not especially new to the machine learning world3. However, these GPTs are uniquely capable of boiling down human language and intent, including which aspects of the input to focus on4. Further, because they are trained on chat-based datasets, they accommodate nudging, correction, and two-way dialogue2.

GPT models break down their massive amounts of training data and input from the users into tokens, and probabilistically generate the next token (a word or part of a word) in their responses, based on their training data. When the models are working as intended, this is usually the next logical word in a sentence to form a coherent response. GPT3.5’s training data is as recent as September 2021, which is the same as the base GPT4 models, but more recent versions of GPT4 include training data as recent as April 202356. However, these models do sometimes demonstrate knowledge of events beyond these timeframes, either because of finetuning by the hosting technology provider, or because of implementations which allow retrieval of live web content such as ChatGPT Plus and Microsoft Copilot.
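The next-token idea can be illustrated with a toy sketch. The probability table below is entirely hypothetical and hand-written; a real GPT model derives such probabilities from a neural network over a vocabulary of many thousands of tokens. The sketch only shows the sampling loop: pick the next token in proportion to its probability, append it, and repeat.

```python
import random

# Hypothetical next-token probabilities, written by hand for illustration.
# A real model computes these from its training data and the full context.
NEXT_TOKEN_PROBS = {
    "the": {"alert": 0.6, "host": 0.3, "pirate": 0.1},
    "alert": {"fired": 0.7, "was": 0.3},
    "host": {"was": 1.0},
    "was": {"isolated": 0.8, "fired": 0.2},
}


def generate(start, max_tokens, seed=0):
    """Sample up to max_tokens tokens, one at a time, after the start token."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    tokens = [start]
    for _ in range(max_tokens):
        choices = NEXT_TOKEN_PROBS.get(tokens[-1])
        if not choices:
            break  # no known continuation for this token
        candidates = list(choices)
        weights = [choices[c] for c in candidates]
        # Weighted random choice: likelier tokens are picked more often.
        tokens.append(rng.choices(candidates, weights=weights, k=1)[0])
    return tokens


print(" ".join(generate("the", max_tokens=4, seed=1)))
```

Note that the loop always produces a plausible-looking continuation whether or not it is true, which is the same property that leads larger models to invent information.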

The most common means to guide ChatGPT and similar models is called “Prompt Engineering,” wherein a user specifies a task and the manner in which they want the model to respond7. These prompts can be simple and silly for creative tasks (“talk like a Pirate!”) or much more instructional, including specifics on formatting such as JSON8. Because these models do not typically have access to live data, users need to add data to the model “context window” either via the prompt itself, via example inputs and outputs (called “few shot” prompting), by encouraging reasoning9, or even through external tools, which can be bundled by frameworks such as LangChain10.
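As a minimal sketch of few-shot prompting with a JSON output format, the snippet below assembles a message list in the role/content shape used by chat-style APIs. The task wording, the example alerts, and the JSON field names (`ip`, `verdict`) are all hypothetical and chosen only for illustration; nothing is sent to a model here.

```python
import json


def build_few_shot_messages(task, examples, new_input):
    """Build a chat message list: instructions, worked examples, then the new input."""
    messages = [{"role": "system", "content": task}]
    for example_input, example_output in examples:
        # Each example is shown as a user turn followed by the ideal assistant reply.
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": json.dumps(example_output)})
    messages.append({"role": "user", "content": new_input})
    return messages


# Hypothetical task and examples (documentation-range IP addresses).
TASK = "Extract the source IP and a verdict from the alert. Reply with JSON only."
EXAMPLES = [
    ("Blocked outbound connection from 192.0.2.10 to known C2.",
     {"ip": "192.0.2.10", "verdict": "malicious"}),
    ("Scheduled backup from 198.51.100.7 completed.",
     {"ip": "198.51.100.7", "verdict": "benign"}),
]

messages = build_few_shot_messages(
    TASK, EXAMPLES, "Repeated failed logins from 203.0.113.5."
)
print(json.dumps(messages, indent=2))
```

The examples occupy space in the context window, so in practice the number of examples has to be balanced against the size of the input being analyzed.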

Perhaps the most important facts to understand about generative AI for cybersecurity use cases are 1) the training data cutoff, and 2) which data is available to the model in context. This is because the models attempt to probabilistically guess the next token, which makes them prone to simply inventing information, known as a “hallucination.” Security use cases often involve researching live, up-to-the-minute information, such as a phishing domain registered in recent days, or the associated logs and alerts within a SIEM or SOAR platform. If this data is not explicitly pulled into the model’s context, generative AI may generate convincing statements based only on its older training data, which can mislead analysts and their security investigations. Techniques such as Retrieval Augmented Generation (RAG), which is discussed in the next section, as well as prompt engineering, can be used to counter these tendencies.

Getting Started With Generative AI

When it comes to getting started, large technology companies offer the best capability for value. However, because the offerings are controlled by those companies there is no guarantee of privacy, so caution is needed with respect to any sensitive data being provided. I recommend the following free or minimally paid resources, noting their limitations:

| Provider | Product | Cost | Model | Token Context Window | Message Limit Per Conversation | Other Details |
|---|---|---|---|---|---|---|
| OpenAI | ChatGPT | Free | GPT3.5-turbo | Unlimited | Unlimited | - |
| OpenAI | ChatGPT Plus | $20/month | GPT4 | 8,000 tokens | Not specified | Access to API. |
| OpenAI | OpenAI Platform | Pay-as-you-go | GPT4 | Up to 128,000 tokens | None (pay as you go) | Robust APIs. |
| Microsoft | Copilot | Free | GPT3.5, GPT4 | 2,000 or 4,000 characters | 5 | - |
| Microsoft | Copilot | $12+/month | GPT3.5, GPT4 | 2,000 or 4,000 characters | 30 | M365 Business Standard License or better |
| Google | Bard | Free | Gemini | Unlimited | Unlimited | Bard Chat. |
| Anthropic | Claude 2.1 | Free | Claude | 100,000 tokens | ~20 messages every 8 hours | Allows document upload |
| Anthropic | Claude Pro | $20/month | Claude | 100,000 tokens | ~100 messages every 8 hours | - |

Because of the privacy and data sharing concerns with these models, it should be noted that there are self-hosted alternatives. Large language models are typically massive in size, requiring similarly scaled infrastructure to load and run. However, some permissively licensed models with fewer parameters can be reduced in size to run on consumer-grade graphics processing units (GPUs) or even central processing units (CPUs) by using a process known as “quantization”11. This comes at the cost of performance in both speed and accuracy but offers more privacy and customization within the limits of the consumer hardware available. These are some frameworks I recommend:
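To make the size and accuracy trade-off of quantization concrete, here is a deliberately simplified sketch that maps floating-point weights onto 8-bit integers with a single scale factor. This is an assumption-laden toy: the weight values are made up, and production tooling generally uses more elaborate block-wise schemes, often at lower bit widths.

```python
def quantize_int8(weights):
    """Map floats onto integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats; the rounding error is the accuracy cost."""
    return [q * scale for q in quantized]


# Hypothetical weights, for illustration only.
WEIGHTS = [0.12, -0.5, 0.33, 0.0]

quantized, scale = quantize_int8(WEIGHTS)
restored = dequantize(quantized, scale)
for original, approx in zip(WEIGHTS, restored):
    print(f"{original:+.4f} -> {approx:+.4f} (error {abs(original - approx):.5f})")
```

Each weight now needs one byte instead of the two or four bytes typical of floating-point storage, and the small per-weight rounding errors are where the loss of accuracy comes from.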

| Project | Models (Common) | Notable Details |
|---|---|---|
| PrivateGPT12 | Mistral 7B Derivatives13 | OpenAI-Compatible API and support for other LLMs with the same14; RAG via “Query Docs” |
| GPT4All Chat15 | Mistral 7B Derivatives; Falcon 7B16; Mistral 7B OpenOrca17 | Offers Desktop App with GUI Download of Models; RAG via “LocalDocs” |

Both options above provide patterns for an extremely common use case of Generative AI: asking natural language questions of local documents or a knowledge base. This is not listed below as a unique cybersecurity use case, but it certainly has value for network defenders who need to search internal documentation. The implementations by both PrivateGPT and GPT4All rely on a technique known as Retrieval Augmented Generation (RAG), which pulls relevant information from a vectorized knowledge base into the model’s context window. This allows the model to answer questions based on the most up-to-date information from the knowledge base, without retraining the entire model.18
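The retrieval step of RAG can be sketched in a few lines. The example below is a toy under stated assumptions: it uses simple word counts and cosine similarity as a stand-in for the learned embeddings and vector stores that real implementations use, and the knowledge base entries are hypothetical. It is not how PrivateGPT or GPT4All are implemented internally.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Turn text into a bag-of-words vector (a stand-in for a real embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = vectorize(question)
    ranked = sorted(documents, key=lambda d: cosine(q, vectorize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(question, documents, top_k=1):
    """Place the retrieved text into the prompt, i.e. the model's context window."""
    context = "\n".join(retrieve(question, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical internal documentation snippets.
DOCS = [
    "To isolate a host, open the EDR console and select Contain Device.",
    "Phishing reports are forwarded to the SOC mailbox for triage.",
    "Password resets require manager approval through the help desk.",
]
QUESTION = "How do I isolate a host in the EDR console?"

print(build_prompt(QUESTION, DOCS))
```

Because only the retrieved passages are placed in context, updating the knowledge base changes the answers without any change to the model itself.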

Cybersecurity Use Cases

Considering their capabilities and limitations, some of the most promising use cases of Generative AI for cybersecurity purposes are:

- Incident Reporting, Summarization, and Communication
- Code Analysis and De-obfuscation
- Playbook / Runbook Generation and Prototyping

Incident Reporting, Summarization, and Communication

Defensive cybersecurity analysts spend a great deal of time consolidating technical and contextual information to communicate with analysts and stakeholders during a cybersecurity investigation. This includes times when new analysts are added to an investigation, handover between shifts, and requesting assistance from application and infrastructure teams. After-action reporting may also be required for internal or external parties, depending on the outcome of the investigation and severity of the incident. All of this takes time and can introduce cognitive overhead due to context switching.

Summarization is a use case in which LLMs generally excel. In the everyday task of summarizing news articles, for example, studies have found the performance of recent models to be on par with human freelance writers19. Cybersecurity analysts are not generally professional writers, which implies even more benefit could be gained. Additionally, these models performed significantly better with “multi-shot” prompting, meaning they were supplied multiple examples before generation. This is contrasted with “zero-shot” prompting, which supplies no examples in context and relies on the model’s training and fine-tuning. Any Security Operations Center (SOC) which investigates more than one or two cases per week should have a reasonable corpus of well-written summaries to use as these examples.20

To incorporate more technical data, some cybersecurity tools such as the malware analysis sandbox engine Any.Run have already introduced summarization mechanisms based on OpenAI to distill the outputs of malware analysis into summaries which can be copied by analysts into chats, handovers, case documentation, and other reports21. As a more comprehensive example, Microsoft’s Security Copilot seeks to address the overall summarization task. At the time of this writing, Security Copilot is not yet generally available (GA); however, its current documentation notes: “Security Copilot can swiftly summarize information about an incident by enhancing incident details with context from data sources, assess its impact, and provide guidance to analysts on how to take remediation steps with guided suggestions.”22

Code Analysis and De-obfuscation

One of the most time-consuming tasks for cybersecurity analysts, especially during a time-sensitive investigation, is code analysis. “Reverse engineering,” or the art of inferring program function from compiled or packaged artifacts, has become its own discipline within cybersecurity. This discipline is typically reserved for more experienced analysts. However, in today’s threat landscape it has increasingly become part of triage, which is typically conducted by less experienced analysts.

Malware authors have increasingly migrated towards “living off the land” techniques which abuse built-in scripting languages such as PowerShell, JavaScript, and Visual Basic. These scripts may be the start of an initial access attempt or intermediate stages in a chain of events, such as a Visual Basic macro which downloads and executes a PowerShell script which ultimately downloads and executes a compiled executable. Depending on the configuration of EDR and Antivirus products, these may be either blocked or detected, triggering security investigations in which triage analysts determine what happened and whether action should be taken. Tools such as Security Copilot also include functionality for analyzing such scripts23.

Another obfuscation example is the delivery chain for NetSupport RAT (Remote Access Tool), which is abused by cybercriminals to gain control over end user devices. Recently, the actors have used mechanisms such as drive-by downloads and fraudulent update sites to kick off and execute obfuscated JavaScript, followed by PowerShell, which downloads a .zip file containing the NetSupport executable. The JavaScript and PowerShell stages use extraneous comments and base64 encoding, respectively, to challenge analysis by humans and detection engines24. In this sample, which has a size of less than 6KB and uses the extraneous comments obfuscation technique, relevant excerpts of the JavaScript (and embedded PowerShell) can be sent to an LLM, which neatly summarizes the function. However, it should be noted that the function including the extraneous comments was longer than the 4,000 character limit for Copilot, and ChatGPT 3.5 refused to analyze the file in at least one instance. To view the analysis from these models, the analyst needed the skill to identify the key function and paste that into the interface, which makes the value of LLMs less evident.

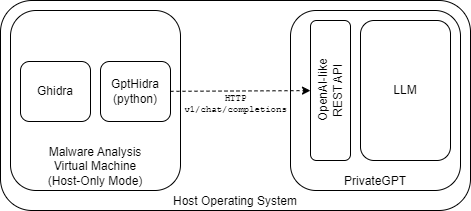
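One way to reduce the manual effort described above is to pre-process a script before pasting it into a model. The sketch below is a hedged illustration, not the procedure used in the analysis: it naively strips comments from JavaScript, decodes a base64 string on the assumption that it is UTF-16LE text (the encoding PowerShell expects for its `-EncodedCommand` parameter, which may not match a given sample), and checks the result against the 4,000 character limit cited above. The sample strings are harmless and made up.

```python
import base64
import re

CHAR_LIMIT = 4000  # character limit cited in the text for Copilot


def strip_js_comments(source):
    """Naively drop /* ... */ blocks and whole-line // comments.

    This does not parse JavaScript, so comment markers inside string
    literals would be mishandled; treat the output as a triage aid only.
    """
    without_blocks = re.sub(r"/\*.*?\*/", "", source, flags=re.DOTALL)
    kept = [
        line
        for line in without_blocks.splitlines()
        if line.strip() and not line.strip().startswith("//")
    ]
    return "\n".join(kept)


def decode_powershell_base64(encoded):
    """Decode base64 text, assuming UTF-16LE (an assumption about the sample)."""
    return base64.b64decode(encoded).decode("utf-16-le")


def fits_limit(text, limit=CHAR_LIMIT):
    """Check whether the text can be pasted within the character limit."""
    return len(text) <= limit


# Harmless, hypothetical inputs for illustration.
SAMPLE_JS = "\n".join([
    "// lorem ipsum filler comment",
    "/* more filler",
    "   spanning lines */",
    "var stage = 'next';",
    "run(stage);",
])
SAMPLE_B64 = base64.b64encode("Write-Output 'hello'".encode("utf-16-le")).decode("ascii")

cleaned = strip_js_comments(SAMPLE_JS)
print(cleaned)
print(decode_powershell_base64(SAMPLE_B64))
print("fits limit:", fits_limit(cleaned))
```

Pre-processing of this kind should only be run on samples inside an appropriate analysis environment, and the cleaned output should be compared with the original before drawing conclusions.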

Another generative AI tool, one more useful for traditional reverse engineering of compiled code, is GptHidra25, a plugin for Ghidra26 which uses the OpenAI API to generate chat completions using the simple prompt: “Explain code: {Ghidra decompiled C function}.” Because the functions within Ghidra are typically smaller and less obfuscated than the JavaScript or PowerShell payloads, this will not commonly hit the context window limits. For reference, as noted in Figure 2, models such as GPT4 have token limits of up to 128,000 tokens, and gpt-3.5-turbo-1106 has a limit of 16,3855. This assumes the analyst is permitted to use these internet-hosted models at all, since reverse engineering and malware analysis commonly take place in isolated environments. In these cases, frameworks such as PrivateGPT can be adapted to use GptHidra with minimal effort due to their offerings of an “OpenAI-like” REST API12. Efficacy testing of these models versus OpenAI and Microsoft models is out of scope for this paper but an area for future exploration.
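The request pattern can be sketched as follows. This is not GptHidra's actual source code; it only builds (and does not send) a chat completion request using the prompt quoted above. The `/v1/chat/completions` path follows the OpenAI API convention, while the base URL, port, model name, and decompiled function are hypothetical placeholders that would need to match a real deployment.

```python
import json
import urllib.request


def build_explain_request(base_url, model, decompiled_function, api_key=None):
    """Build a chat completion request for an OpenAI-compatible endpoint."""
    payload = {
        "model": model,
        "messages": [
            {"role": "user", "content": f"Explain code: {decompiled_function}"}
        ],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # a self-hosted endpoint may not require a key
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Hypothetical values: a local endpoint inside an isolated analysis network.
LOCAL_BASE_URL = "http://localhost:8001"
MODEL_NAME = "local-model"
DECOMPILED = "int FUN_00401000(int param_1) { return param_1 ^ 0x5a; }"

request = build_explain_request(LOCAL_BASE_URL, MODEL_NAME, DECOMPILED)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request) and parse the JSON reply.
```

Keeping the base URL as a parameter is what makes the swap between an internet-hosted model and a self-hosted one a configuration change rather than a code change.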

Playbook / Runbook Generation and Prototyping

Cybersecurity analysts in modern SOCs tend to use procedural documents or “runbooks” for repeatability of tasks and consistency of analysis. These may be stored in wikis or other team-accessible repositories such as a file share, and in recent years, these runbooks are increasingly starting to take the form of SOAR (Security Orchestration Automation and Response) playbooks. SOAR systems are designed to integrate with Security Information and Event Management (SIEM) systems and may even be considered a sub-feature rather than a dedicated system depending on the product line and nature of the network they are deployed in27.

Standards such as CACAO 2.0 aim to provide a common framework for these SOAR playbooks28, but in practice there has been limited uptake amongst SOAR vendors for import to their tools, and organizations tend to author playbooks in their language and technology of choice. Three notable examples of public but custom-formatted playbook repositories are Palo Alto Networks’ Cortex XSOAR29, Splunk’s Phantom30, and Microsoft Sentinel31. Respectively, these are written in a custom YAML format32, Python, and a custom JSON format. The common factor in most SOCs is the written procedural documentation from which these playbooks are generated.

There are potentially immense time savings in prototyping these playbooks using Generative AI based on written documentation. Accelerating time to testing reduces analyst and developer hours spent on learning bespoke SOAR playbook syntaxes or generating boilerplate code33. Based on my experience, most organizations start by developing playbooks for their most common use cases such as phishing or antivirus alerts. These playbooks save large amounts of analyst time by automating large numbers of alerts, and thus justify the development hours spent on them. However, it is more difficult to justify expending the same level of effort writing SOAR playbooks which automate alerts seen less frequently by the SOC. Another important consideration is keeping playbooks and their subroutines up to date with changes in procedures. These minor changes are most likely to be reflected in written documentation, and Generative AI may be used to quickly develop adapted subroutines to add into the larger SOAR playbook.

Conclusion

Generative AI is promising for cybersecurity use cases if a practitioner knows its capabilities and can apply it in tandem with other tools and pipelines. Generative AI can also be a dangerous technology for analysts to rely on if they do not understand the technology’s limitations. The paper has explored several use cases specific to the defensive cybersecurity domain, which practitioners can use as starting points in their research or when actively defending their networks. Regardless of our specific cybersecurity applications, having a basic understanding of the technology and infrastructure is increasingly important so we can properly secure and defend it.

- https://www.merriam-webster.com/dictionary/artificial+intelligence
- https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work/
- https://platform.openai.com/docs/models/gpt-3-5
- https://platform.openai.com/docs/models/gpt-4-and-gpt-4-turbo
- https://platform.openai.com/docs/guides/text-generation/json-mode
- https://python.langchain.com/docs/get_started/introduction
- https://towardsdatascience.com/quantize-llama-models-with-ggml-and-llama-cpp-3612dfbcc172
- https://docs.privategpt.dev/manual/advanced-setup/llm-backends
- https://any.run/cybersecurity-blog/chatgpt-powered-analysis-method/
- https://learn.microsoft.com/en-us/security-copilot/microsoft-security-copilot
- https://learn.microsoft.com/en-us/security-copilot/investigate-incident-malicious-script
- https://blogs.vmware.com/security/2023/11/netsupport-rat-the-rat-king-returns.html
- https://www.oasis-open.org/2023/12/06/cacao-security-playbooks-v2-blog/
- https://github.com/Azure/Azure-Sentinel/tree/master/Playbooks
- https://applied-gai-in-security.ghost.io/revolutionizing-cybersecurity-merging-generative-ai-with-soar-for-enhanced-automation-and-intelligence/